Flask on AWS Serverless: A learning journey - Part 2

About 3 years ago I learnt some basic Python, which I've used almost exclusively to

With a title like that, you'd think we need lots of popcorn! In large enterprises, with titles like Enterprise Architect and Solution Architect, and each trying to prove their worth, it makes for lots of fun (or rather, documents written for the sole purpose of writing documents, which are outdated even before they are written). Add to that each one of them becoming an artist, trying to draw architecture with shapes and lines, where obviously the one in the centre is the most important!

When I was at Accenture, they ran really awesome, internally led, week-long training courses at their global training centers in the UK and US, and we spent a long time discussing architecture. These were hands-on, in-depth courses. One of the opening talks that still vividly sticks with me was about the infamous Tacoma Narrows Bridge collapse, and why architecture matters. We then spent days on the -ilities.

But this article will just be a quick glimpse into a short (14 mins) keynote that Martin Fowler gave on Making Architecture Matter.

He starts off by saying what a bad rep Architecture has: guys who haven't coded in over 10 years, holding on to the precious 'Architecture', thinking that it's somehow "above" programming. They are more concerned about using their powers to say NO to projects that need to launch, simply so they can put their stamp of approval on it. They couldn't be more wrong.

He then tries to define what Architecture is, starting off with a typical IEEE definition, and then moving towards something more easily digestible like "expert developers working on that project have a shared understanding of the system design" and "things you need (or wish) you get right early on". Read Who Needs an Architect? to see how the conversation started off with:

“We shouldn’t interview anyone who has ‘architect’ on his resume.”

This is perhaps the most succinct description of what an architect does:

.....be very aware of what’s going on in the project, looking out for important issues and tackling them before they become a serious problem. When I see an architect like this, the most noticeable part of the work is the intense collaboration. In the morning, the architect programs with a developer, trying to harvest some common locking code. In the afternoon, the architect participates in a requirements session, helping explain to the requirements people the technical consequences of some of their ideas in nontechnical terms—such as development costs. In many ways, the most important activity of Architectus Oryzus is to mentor the development team, to raise their level so that they can take on more complex issues. Improving the development team’s ability gives an architect much greater leverage than being the sole decision maker and thus running the risk of being an architectural bottleneck. This leads to the satisfying rule of thumb that an architect’s value is inversely proportional to the number of decisions he or she makes.

But the part that really got me was something that happened to me a few months back. A senior manager walks into our developer space and starts ranting about how we need to be delivering more, faster, and caring less about quality and "architecture".

We need to put less effort on quality so we can build more features for our next release

So my response, as Martin explains, incorrectly took the moral path of saying "we need to do it right, because..."

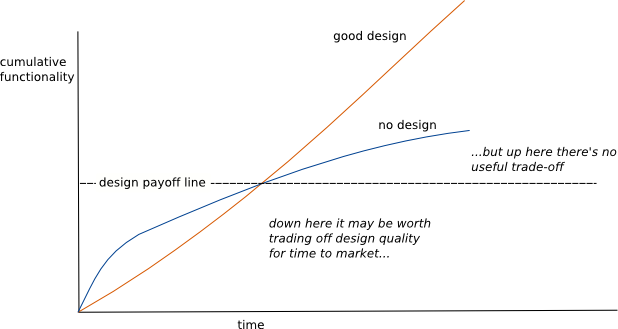

But the correct response shows what actually happens over time when you have the wrong versus the right design/architecture, as depicted in Martin's Design Stamina Hypothesis article.

So below the imaginary line, there are some tradeoffs that can be made. Anything above that will just build up technical debt. This brings an interesting tie-in to one of Google's SRE principles, that of Risk and Error Budgets. The idea is that building 100% reliable services, as a general rule for all services, is impractical and costly. There is a certain point at which increasing the level of reliability is not noticeable to the end-user, or makes the service worse off, because it becomes more difficult to release new features. So if you define a certain acceptable level of reliability, say 99%, you now have enough room to cater for failure and for releasing new features. It's an acceptable level of risk. At this level then, coming back to Martin's graph above, you may choose to trade quality for speed.
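To make the error budget idea concrete, here is a minimal Python sketch of the arithmetic. The function names, the 30-day window, and the "ship only while budget remains" rule are my own assumptions for illustration, not something taken from Google's SRE material or from Martin's talk.

```python
def error_budget_minutes(slo_percent: float, window_days: int = 30) -> float:
    """Minutes of unavailability the target allows within the window.

    slo_percent is the agreed reliability target, e.g. 99 for 99%.
    The 30-day default window is an assumption for this sketch.
    """
    if not 0 < slo_percent <= 100:
        raise ValueError("slo_percent must be greater than 0 and at most 100")
    total_minutes = window_days * 24 * 60
    return (100 - slo_percent) / 100 * total_minutes


def remaining_budget_minutes(
    slo_percent: float, downtime_minutes: float, window_days: int = 30
) -> float:
    """Budget left after subtracting the downtime already incurred."""
    return error_budget_minutes(slo_percent, window_days) - downtime_minutes


def can_trade_quality_for_speed(
    slo_percent: float, downtime_minutes: float, window_days: int = 30
) -> bool:
    """Hypothetical rule: take on release risk only while budget remains."""
    return remaining_budget_minutes(slo_percent, downtime_minutes, window_days) > 0


# Example: a 99% target over 30 days, with 400 minutes of downtime so far.
budget = error_budget_minutes(99)
left = remaining_budget_minutes(99, downtime_minutes=400)
print(f"budget: {budget:.1f} min, remaining: {left:.1f} min")
print("ok to ship risky features:", can_trade_quality_for_speed(99, 400))
```

At 99% over 30 days the budget works out to 1% of 43,200 minutes, which is 432 minutes. Tightening the target to 99.9% cuts that to about 43 minutes, which is why chasing extra nines gets expensive so quickly.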

Not directly related to this topic, but one of Martin's articles that the above one on architecture links to is about how you cannot measure the productivity of software development. That was back in 2003. Well, just this week I read Accelerate: The Science of Lean Software and DevOps: Building and Scaling High Performing Technology Organizations by Nicole Forsgren, Jez Humble, and Gene Kim, the same folks behind The Phoenix Project and the State of DevOps Reports. The intro is written by Martin, who explains his relief that these folks have scientifically explained, using 5 years of research, how to measure software productivity and how it leads to business outcomes. Wow!

I quite like these ways of working, and these definitions. They are so much more human, and cater for different scenarios, rather than the textbook "thou shalt deliver 100% up-time" when we know it's not practical, cost-efficient, or even required. And this goes for agile methodologies and the DevOps movement too: making things so much more human and understandable, and saying up-front that it's for each team to determine their own way of working, with no one-size-fits-all card.