
Telegram is a common messaging application, with over 500 million users. I've been using it daily since 2015, and I prefer it over WhatsApp for many reasons: native PC/Mac applications (not just a mobile app), cloud-based storage, the ability to run it with the same identity on multiple devices concurrently, an open API, and open-source clients. It has supported bots since 2015: third-party applications that run inside Telegram. Users interact with bots by sending them messages, commands and inline requests, and you control your bots using HTTPS requests to the Bot API.

A few months ago, I wrote TelegramTasweerBot to control images and videos sent to groups and channels. It can be used to control personally identifiable information (PII), specifically images of people. The bot deletes any image that contains a face, and now deletes videos and emojis of faces as well.

Instead of using the Telegram Bot API directly, I used python-telegram-bot (PTB), a Python wrapper/framework that makes it easier to write Telegram bots.

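Telegram bots can be run in two ways: polling, where the bot keeps asking the Bot API for new updates, and webhooks, where Telegram sends each update as an HTTPS request to a URL you register.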
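To make the polling mode concrete, here is a minimal sketch of one polling step. It assumes the Bot API's `getUpdates` convention of passing an `offset` one higher than the last `update_id` you handled. The names `poll_once` and `fake_fetch` are made up for illustration, and the fetch function is injected so the sketch runs without a network connection or a bot token. This is not how PTB implements it internally.

```python
from typing import Callable, Iterable

# A fetch function takes the next offset and returns update dicts, the way a
# call to the Bot API's getUpdates method would. Injected for illustration.
FetchUpdates = Callable[[int], Iterable[dict]]


def poll_once(fetch: FetchUpdates, offset: int, handle: Callable[[dict], None]) -> int:
    """Fetch one batch of updates, handle each, and return the next offset."""
    for update in fetch(offset):
        handle(update)
        # Asking for update_id + 1 next time marks this update as processed.
        offset = max(offset, update["update_id"] + 1)
    return offset


def fake_fetch(offset: int) -> list:
    """Hypothetical stand-in for an HTTPS request to getUpdates."""
    pending = [
        {"update_id": 10, "message": {"text": "hi"}},
        {"update_id": 11, "message": {"text": "there"}},
    ]
    return [u for u in pending if u["update_id"] >= offset]


seen = []
next_offset = poll_once(fake_fetch, 0, seen.append)
# A second step with the returned offset finds nothing new.
again = poll_once(fake_fetch, next_offset, seen.append)
print(next_offset, again, len(seen))
```

A real bot would call something like `poll_once` in an endless loop, which is exactly why the process has to stay up all the time.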

For the first few months, I ran the bot using polling (see the code). It ran as a Python app on an AWS EC2 instance. The challenge here is making sure the bot is always running (I used monit), making sure the instance is always running, and deploying new changes to the code. You have to think about these things and solve them. And because the instance runs 24x7, you are paying for it all the time, even when the bot is not used.

That's why I wanted to move it to AWS Lambda - serverless computing, where your code only runs when it's needed, and you only pay when it runs. Because you don't manage the instance where the code runs, you don't have to worry about its uptime, maintenance, or any of that Ops stuff. And the most important thing for me: deployment of the code is super easy. To make it even easier, I've used AWS SAM to define and deploy the bot - SAM takes care of deploying all the AWS services required: API Gateway, Lambda, DynamoDB, and all logs in CloudWatch.
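To give an idea of what the webhook side looks like on Lambda, here is a minimal sketch using only the standard library. It is not the bot's actual handler, which is built on PTB. It assumes an API Gateway proxy integration, where the Telegram update arrives as a JSON string in `event["body"]`, and it uses the Bot API option of answering the webhook request with a single method call in the response body. `contains_face` is a hypothetical placeholder: the real bot does face detection, while this sketch just flags every photo.

```python
import base64
import json


def contains_face(message: dict) -> bool:
    """Hypothetical placeholder for face detection: flags any photo message."""
    return "photo" in message


def lambda_handler(event: dict, context: object) -> dict:
    """Handle one Telegram update delivered through API Gateway."""
    body = event.get("body") or "{}"
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    update = json.loads(body)

    # Groups deliver "message", channels deliver "channel_post".
    message = update.get("message") or update.get("channel_post") or {}
    reply = {}
    if message and contains_face(message):
        # One Bot API call can be returned directly in the webhook response.
        reply = {
            "method": "deleteMessage",
            "chat_id": message["chat"]["id"],
            "message_id": message["message_id"],
        }

    # Answer 200 either way so Telegram does not retry the same update.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(reply) if reply else "",
    }


photo_update = {"update_id": 1, "message": {
    "message_id": 7, "chat": {"id": -100}, "photo": [{"file_id": "abc"}]}}
text_update = {"update_id": 2, "message": {
    "message_id": 8, "chat": {"id": -100}, "text": "hello"}}

photo_result = lambda_handler({"body": json.dumps(photo_update)}, None)
text_result = lambda_handler({"body": json.dumps(text_update)}, None)
print(photo_result["body"])
```

Nothing here runs between updates, which is the whole point: Lambda only starts the function when Telegram calls the webhook URL.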

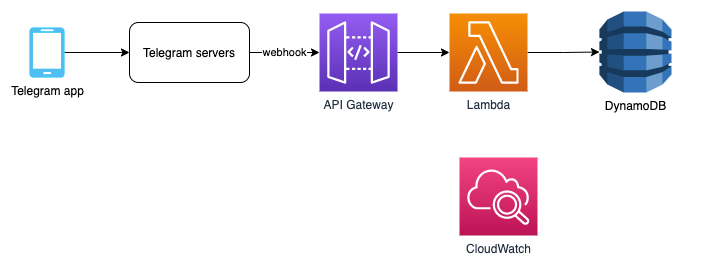

Since last week, TelegramTasweerBot can also run on AWS Lambda: this is the Lambda handler, and this is the SAM template that defines it all. The architecture looks like this:

All it takes to build and deploy this in your own AWS account, with your own bot token, is to clone/download the repository locally and run `sam build && sam deploy`. That's it - check out the README for more details.

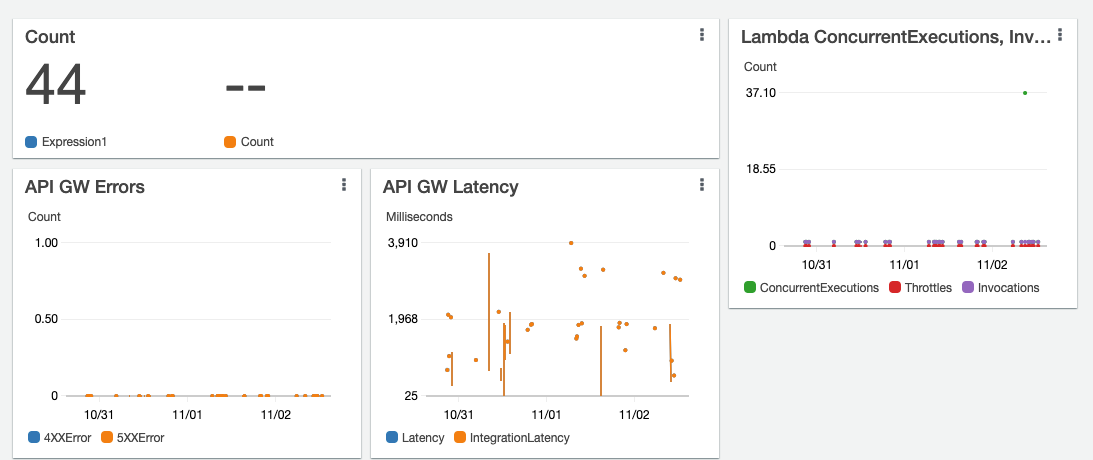

I can now get stats on how many times the bot was invoked, how long it ran, how many errors it hit, etc., all from CloudWatch:

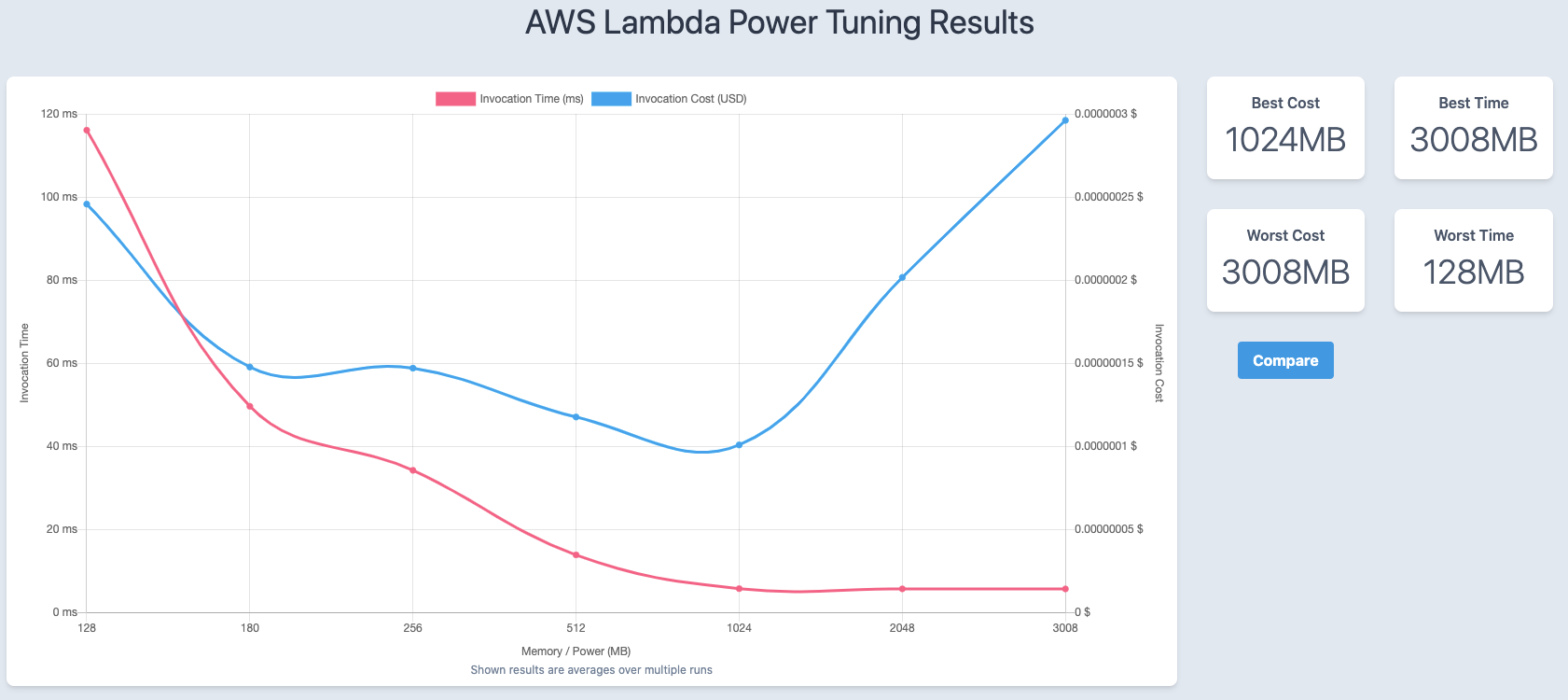

Lambda lets you allocate a specific amount of memory to a function, which dictates how it performs, and thus what it costs. What's interesting is that there is a balance between performance and cost. If you allocate less RAM, the price per millisecond is lower, but the function runs slower and can actually end up costing more. On the flip side, if you allocate more memory, it might be cheaper to run because it finishes faster, even though the memory itself costs more. So there is a sweet spot you can target: the amount of memory that makes your Lambda function run both faster and cheaper. To help figure out what that sweet spot is, I used AWS Lambda Power Tuning to test different configurations, measure the running times, and calculate the cost of each run.

Power Tuning takes just a few minutes to set up and run, and the results show that my bot runs fastest and cheapest with 1024 MB of memory.
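The memory/cost trade-off is easy to work through by hand. Here is a small sketch, assuming Lambda bills compute as memory (in GB) times duration (in seconds) times a price per GB-second. The price below is an assumption, so check the current pricing for your region and architecture. The durations are invented for illustration and are not my bot's measurements, and the per-request charge is ignored because it is the same for every memory size.

```python
# Assumed price per GB-second; verify against current Lambda pricing.
PRICE_PER_GB_SECOND = 0.0000166667


def invocation_cost(memory_mb: int, duration_ms: float) -> float:
    """Compute cost of one invocation: GB of memory x seconds x price."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return gb_seconds * PRICE_PER_GB_SECOND


# Hypothetical durations per memory size (MB -> ms), not measured results.
runs = {128: 4000.0, 512: 900.0, 1024: 400.0, 2048: 350.0}

costs = {mb: invocation_cost(mb, ms) for mb, ms in runs.items()}
cheapest = min(costs, key=costs.get)

for mb, cost in costs.items():
    print(f"{mb:>5} MB  {runs[mb]:>6.0f} ms  ${cost:.10f}")
print("cheapest:", cheapest, "MB")
```

With these made-up numbers, 128 MB uses 0.5 GB-seconds per run while 1024 MB uses only 0.4, so the bigger configuration is both faster and cheaper. Going up to 2048 MB barely speeds things up and costs more, which is the kind of curve Power Tuning plots for you.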